- Topic1/3

26k Popularity

40k Popularity

4k Popularity

551 Popularity

763 Popularity

- Pin

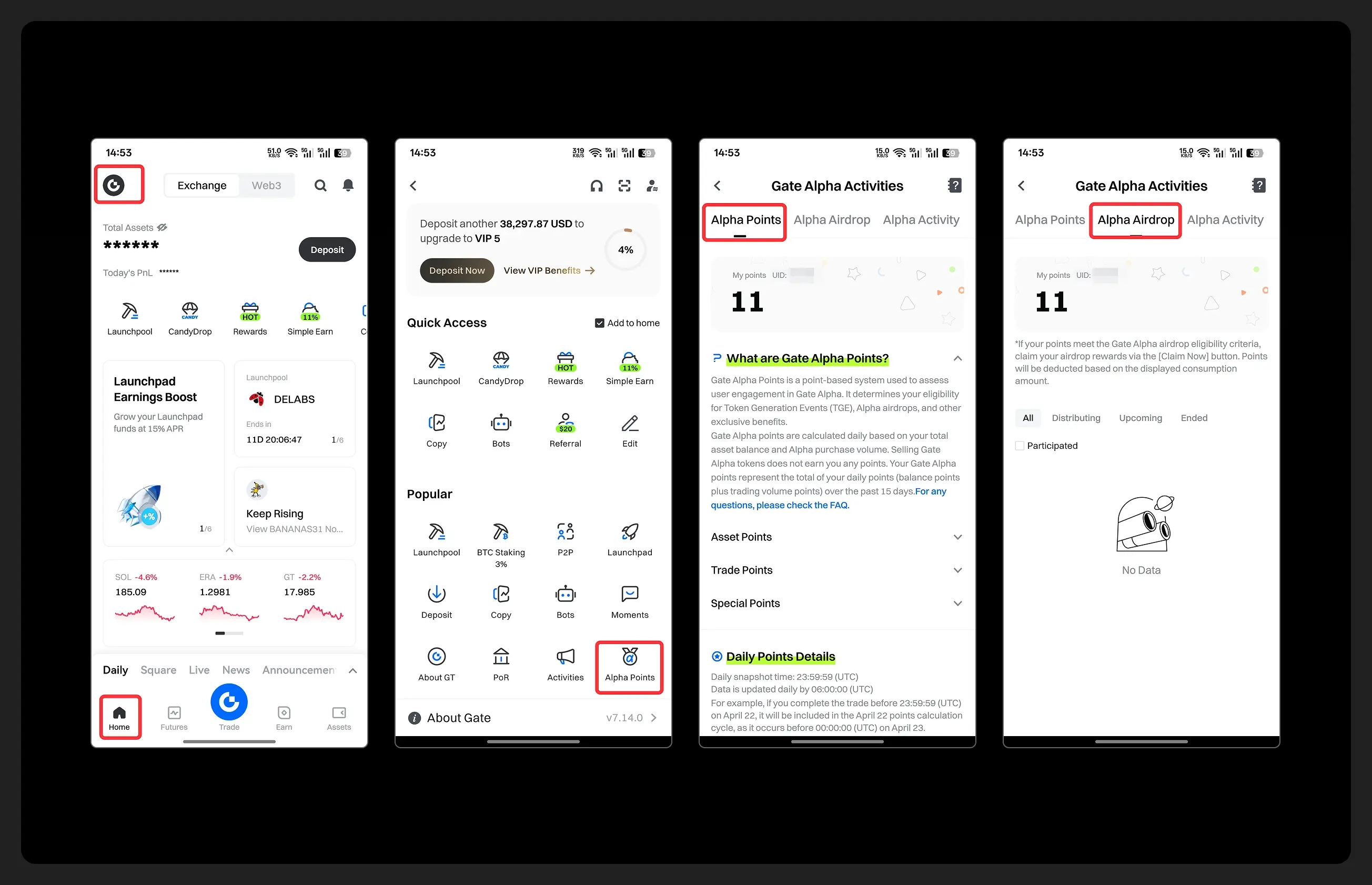

Unrestricted AI Language Models: Emerging Security Threats in the Crypto Industry

The Dark Side of Artificial Intelligence: The Threat of Unrestricted Language Models to the Crypto Industry

With the rapid development of artificial intelligence, advanced models ranging from the GPT series to Gemini are profoundly changing the way we live. However, this progress also brings potential risks, especially with the emergence of unrestricted or malicious large language models.

Unrestricted language models are models specifically designed or modified to bypass the built-in safety mechanisms and ethical constraints of mainstream models. Although mainstream model developers invest significant resources to prevent misuse, some individuals and organizations with malicious intent have begun to seek out or build unrestricted models. This article explores the potential threats such models pose to the crypto industry, along with the associated security challenges and response strategies.

The Dangers of Unrestricted Language Models

These models make it easy to carry out malicious tasks that previously required specialized skills. Attackers can obtain the weights and code of open-source models and then fine-tune them on datasets containing malicious content, creating customized attack tools. This practice brings multiple risks:

Typical Unrestricted Language Models

WormGPT: A Dark Version of GPT

WormGPT is a malicious language model sold openly that claims to have no ethical constraints. It is based on an open-source model and trained on a large dataset of malware-related material. Its main uses include generating realistic business email compromise (BEC) attacks and phishing emails. In the crypto field, it may be used for:

DarkBERT: Dark Web Content Analysis Tool

DarkBERT is a language model trained on dark web data, originally intended to help researchers and law enforcement agencies analyze dark web activities. However, if misused, it could pose serious threats:

FraudGPT: Online Fraud Tool

FraudGPT is marketed as an upgraded version of WormGPT with a more comprehensive feature set. In the crypto field, it may be used for:

GhostGPT: An AI Assistant Without Ethical Constraints

GhostGPT is explicitly positioned as a chatbot without ethical restrictions. In the crypto field, it may be used for:

Venice.ai: Potential Risks of Uncensored Access

Venice.ai provides access to various language models, including some with fewer restrictions. While it aims to offer an open AI experience, it may also be subject to misuse:

Response Strategies

To counter the threats posed by unrestricted language models, all parties in the security ecosystem need to work together.
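Several of the models described above are said to generate phishing and business email compromise messages, so one concrete building block on the defender's side is an automated message screener. The Python code below is a minimal, hypothetical sketch rather than a measure this article prescribes: the `TRUSTED_DOMAINS` allowlist (with the placeholder domain `example-exchange.com`), the phrase lists, the scores, and the `screen_message` and `levenshtein` helpers are all illustrative assumptions. It flags requests for wallet secrets, pressure language, and typosquatted domains within a small edit distance of a trusted domain.

```python
import re
from dataclasses import dataclass, field

# Hypothetical allowlist of domains an organization actually uses.
TRUSTED_DOMAINS = {"example-exchange.com"}

# Secrets a legitimate exchange or wallet provider should never ask a user to send.
SECRET_REQUESTS = ("seed phrase", "recovery phrase", "private key", "mnemonic")

# Pressure language typical of phishing and BEC messages (illustrative, not exhaustive).
URGENCY_WORDS = ("urgent", "immediately", "within 24 hours", "account will be suspended")

# Captures the host part of http(s) URLs.
URL_HOST_RE = re.compile(r"https?://([a-z0-9.-]+)", re.IGNORECASE)


def levenshtein(a: str, b: str) -> int:
    """Edit distance via the standard two-row dynamic-programming table."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        prev = curr
    return prev[-1]


@dataclass
class Verdict:
    score: int = 0
    reasons: list[str] = field(default_factory=list)


def screen_message(text: str, max_distance: int = 2) -> Verdict:
    """Score a message with simple phishing heuristics; higher means more suspicious."""
    verdict = Verdict()
    lowered = text.lower()

    for phrase in SECRET_REQUESTS:
        if phrase in lowered:
            verdict.score += 3
            verdict.reasons.append(f"asks for secret: {phrase}")

    for word in URGENCY_WORDS:
        if word in lowered:
            verdict.score += 1
            verdict.reasons.append(f"urgency: {word}")

    for host in URL_HOST_RE.findall(text):
        host = host.lower().rstrip(".")
        if host.startswith("www."):
            host = host[len("www."):]
        if host in TRUSTED_DOMAINS:
            continue  # exact match with a trusted domain
        for trusted in TRUSTED_DOMAINS:
            # Close-but-not-equal hosts look like typosquats of a trusted domain.
            if levenshtein(host, trusted) <= max_distance:
                verdict.score += 3
                verdict.reasons.append(f"lookalike domain: {host} ~ {trusted}")

    return verdict


if __name__ == "__main__":
    sample = ("URGENT: verify your wallet at https://examp1e-exchange.com/verify "
              "and reply with your seed phrase immediately.")
    result = screen_message(sample)
    print(result.score, result.reasons)
```

The sample message scores 8: one secret request, two urgency cues, and one lookalike domain (`examp1e-exchange.com` is a single substitution away from the trusted domain). Fixed keyword rules like these are easy for a language model to paraphrase around, and plain edit distance misses Unicode homoglyphs and subdomain tricks such as `example-exchange.com.attacker.io`, so a screener of this kind would only be one signal among several controls.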

The emergence of unrestricted language models marks a new challenge for cybersecurity. Only through the joint efforts of all parties can we effectively address these emerging threats and ensure the healthy development of the crypto industry.